Consensus

Use consensus scores to evaluate annotation quality. Assign the same item and annotation task to several annotators, then use consensus scores to compare their work.
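This page does not say how a score is calculated. As a rough intuition for bounding boxes, agreement between two annotators is often measured with intersection over union (IoU). The sketch below illustrates that idea only; it is an assumption, not necessarily the metric `sa.consensus` uses, and the `iou` helper and its `(x_min, y_min, x_max, y_max)` box format are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    # Corners of the overlapping rectangle, if there is one.
    left = max(box_a[0], box_b[0])
    top = max(box_a[1], box_b[1])
    right = min(box_a[2], box_b[2])
    bottom = min(box_a[3], box_b[3])

    # Width and height are clamped at zero so disjoint boxes give no overlap.
    intersection = max(0.0, right - left) * max(0.0, bottom - top)

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection

    return intersection / union if union > 0 else 0.0


# Two annotators draw the same car with slightly shifted boxes.
team_1_box = (0, 0, 10, 10)
team_2_box = (5, 0, 15, 10)
overlap = iou(team_1_box, team_2_box)
print(round(overlap, 3))
```

A value near 1 means the boxes almost coincide, and a value near 0 means the annotators barely agree on where the object is.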

To compute the consensus scores among different folders:

    # sa is the SuperAnnotate SDK handle, set up beforehand.
    consensus_df = sa.consensus(
        project="Project Name",
        folder_names=["Team 1", "Team 2", "Team 3"],
        annotation_type="bbox",
    )

The call above exports the listed folders and computes the consensus scores between matching images. If the data is already exported, pass its location with export_root:

    consensus_df = sa.consensus(
        project="Project Name",
        folder_names=["Team 1", "Team 2", "Team 3"],
        export_root="./exports",
        annotation_type="bbox",
    )

By default, consensus scores are computed for every image that matches across the specified folders.

To restrict the computation to a specific list of images, pass image_list:

    consensus_df = sa.consensus(
        project="Project Name",
        folder_names=["Team 1", "Team 2", "Team 3"],
        export_root="./exports",
        image_list=["image1.png", "image10.png", "image100.png"],
        annotation_type="bbox",
    )

The returned DataFrame lists all the annotated instances with their corresponding metadata and consensus scores:

| instanceId | className | folderName | score | creatorEmail | imageName |
|---|---|---|---|---|---|
| 0 | car | Team 1 | 0.815302 | [email protected] | berlin1.png |
| 0 | car | Team 2 | 0.822203 | [email protected] | berlin1.png |
| 0 | car | Team 3 | 0.773299 | [email protected] | berlin1.png |
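Once the scores are available, a natural next step is to summarize them per folder or per image. The sketch below is one way to do that with the standard library only, using the three rows from the table as plain dictionaries (the email column is left out because it is not needed here). With a real result, `consensus_df.to_dict("records")` from pandas gives rows of the same shape. The helper names `mean_score_by` and `lowest_scoring` are hypothetical and not part of the SDK.

```python
from collections import defaultdict
from statistics import mean

# Rows copied from the table above; only the columns used below are kept.
records = [
    {"instanceId": 0, "className": "car", "folderName": "Team 1",
     "score": 0.815302, "imageName": "berlin1.png"},
    {"instanceId": 0, "className": "car", "folderName": "Team 2",
     "score": 0.822203, "imageName": "berlin1.png"},
    {"instanceId": 0, "className": "car", "folderName": "Team 3",
     "score": 0.773299, "imageName": "berlin1.png"},
]


def mean_score_by(rows, key):
    """Average the score of all rows that share the same value for `key`."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[key]].append(row["score"])
    return {name: mean(scores) for name, scores in groups.items()}


def lowest_scoring(rows):
    """Return the row with the smallest consensus score."""
    return min(rows, key=lambda row: row["score"])


per_image = mean_score_by(records, "imageName")
per_folder = mean_score_by(records, "folderName")
weakest = lowest_scoring(records)

print(per_image)
print(weakest["folderName"])  # prints "Team 3"
```

Grouping by folderName compares teams, while grouping by imageName or className points to images or classes where annotators disagree most.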

Set show_plots to True to also get the following analytics plots:

    consensus_df = sa.consensus(
        project="Project Name",
        folder_names=["Team 1", "Team 2", "Team 3"],
        export_root="./exports",
        image_list=["image1.png", "image10.png", "image100.png"],
        annotation_type="bbox",
        show_plots=True,
    )

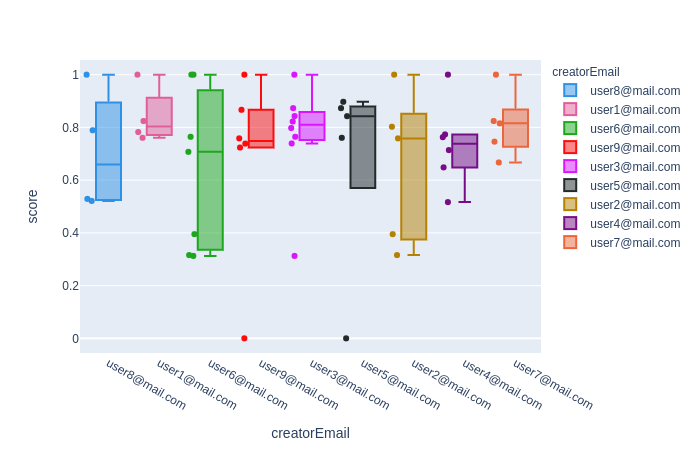

Box plot of the consensus scores of each annotator.

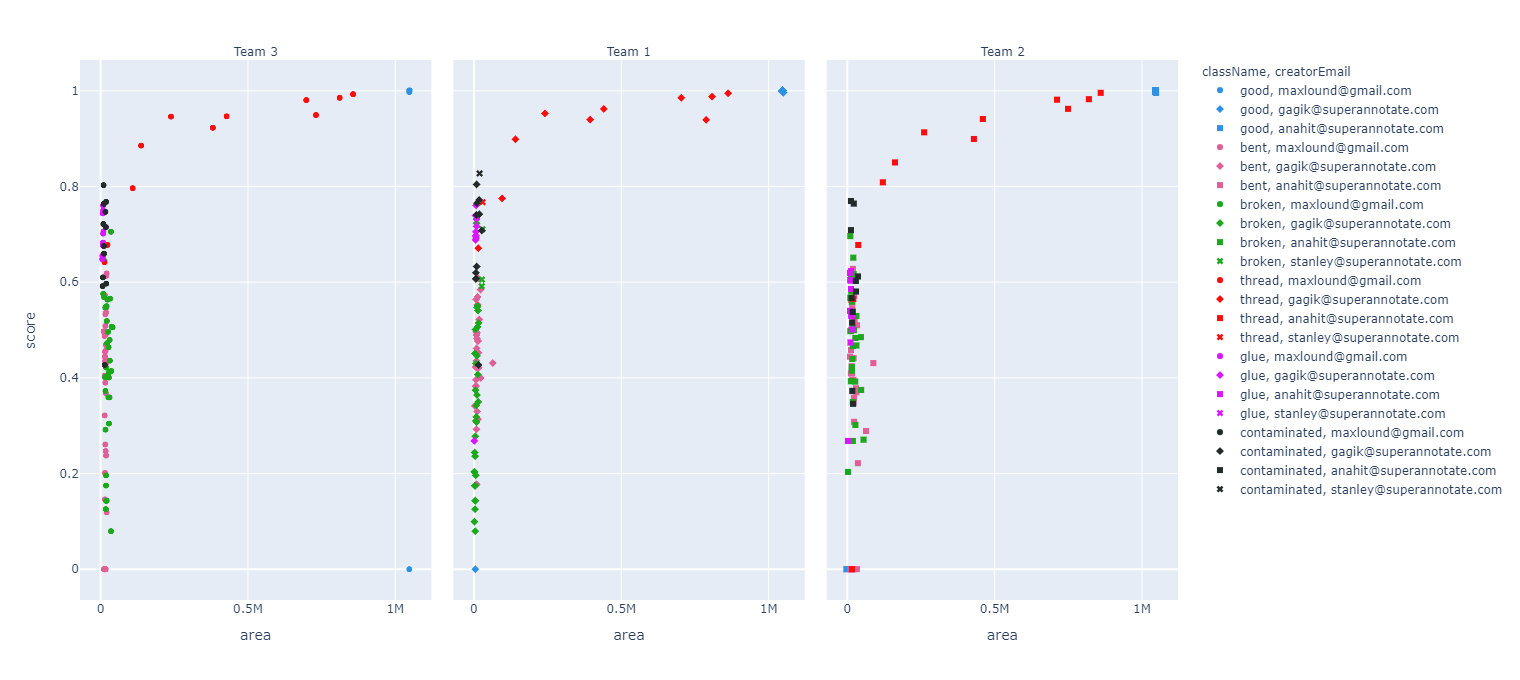

Scatter plot of consensus score versus instance area for each folder.
