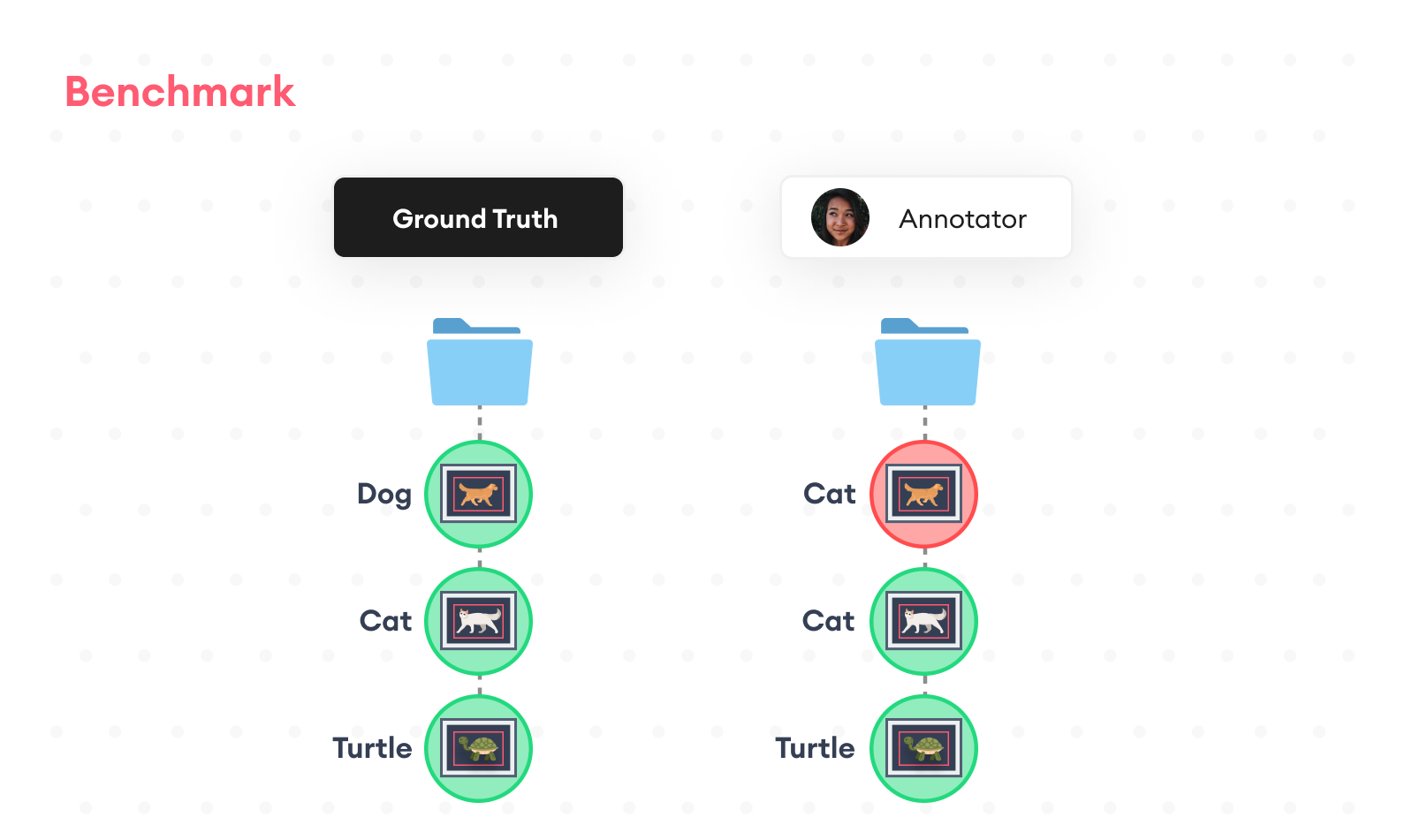

Benchmark

The benchmark tool allows you to compare instances to ground truth annotations. You can use the benchmarking tool in Image Projects for the following instance types: Bounding Box, Polygon, and Point.

How does it work?

Suppose you have a project with several folders that all contain the same images. You assign each folder to an Annotator. Once the Annotators finish annotating all the images, you upload a folder containing the ground truth annotations to the project and then calculate the benchmark.

Calculate the benchmark of Bounding Boxes and Polygons

- Match all the corresponding instances across all images and folders, including the ground truth folder.

- Compute the intersection over union (IoU) score between the instance and the matching ground truth instance.
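The IoU score in the second step can be illustrated for the bounding box case with a minimal sketch. The `box_iou` helper and the `(x_min, y_min, x_max, y_max)` box layout are assumptions made for this example; they are not part of the SDK and may differ from how the tool computes the score internally.

```python
from typing import Tuple

# Hypothetical box layout for this sketch: (x_min, y_min, x_max, y_max)
Box = Tuple[float, float, float, float]


def box_iou(a: Box, b: Box) -> float:
    """Return the intersection over union of two axis-aligned boxes."""
    # Corners of the overlapping rectangle (if the boxes overlap at all)
    ix_min = max(a[0], b[0])
    iy_min = max(a[1], b[1])
    ix_max = min(a[2], b[2])
    iy_max = min(a[3], b[3])

    # Clamp to zero so disjoint boxes get an empty intersection
    inter_w = max(0.0, ix_max - ix_min)
    inter_h = max(0.0, iy_max - iy_min)
    intersection = inter_w * inter_h

    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - intersection

    # Degenerate boxes with zero area get a score of 0
    return intersection / union if union > 0 else 0.0


annotated = (10, 10, 50, 50)     # instance drawn by an Annotator
ground_truth = (30, 30, 70, 70)  # matching ground truth instance
score = box_iou(annotated, ground_truth)
print(f"IoU: {score:.3f}")
```

A score of 1 means the instance coincides exactly with the ground truth instance, and a score of 0 means the two do not overlap.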

Calculate the benchmark of Points

- Match all the corresponding instances across all images and folders, including the ground truth folder.

- Check if the instance matches the ground truth instance. If yes, then the score of the instance is 1. Otherwise, it’s 0.
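The binary scoring of Points can be sketched as follows. This page does not state how a point is judged to match its ground truth counterpart, so the distance-based rule, the `point_score` helper, and the `tolerance` parameter below are all assumptions made purely for illustration.

```python
import math
from typing import Tuple

Point = Tuple[float, float]  # (x, y) in pixels


def point_score(point: Point, gt_point: Point, tolerance: float = 5.0) -> int:
    """Return 1 if the point matches the ground truth point, otherwise 0.

    Assumption for this sketch: two points match when they lie within
    `tolerance` pixels of each other. The actual matching rule may differ.
    """
    distance = math.hypot(point[0] - gt_point[0], point[1] - gt_point[1])
    return 1 if distance <= tolerance else 0


print(point_score((100, 100), (103, 104)))  # 5 pixels apart, within tolerance
print(point_score((100, 100), (110, 100)))  # 10 pixels apart, outside tolerance
```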

SDK functions

When there's a predefined folder for ground truth annotations, use benchmark to compute the annotation quality against the ground truth:

    benchmark_df = sa.benchmark(
        project="Project Name",
        gt_folder="Benchmark Annotations",
        folder_names=["Team 1", "Team 2", "Team 3"],
        export_root="./exports",
    )

The function returns a pandas DataFrame with the same structure as the one returned by the consensus computation.

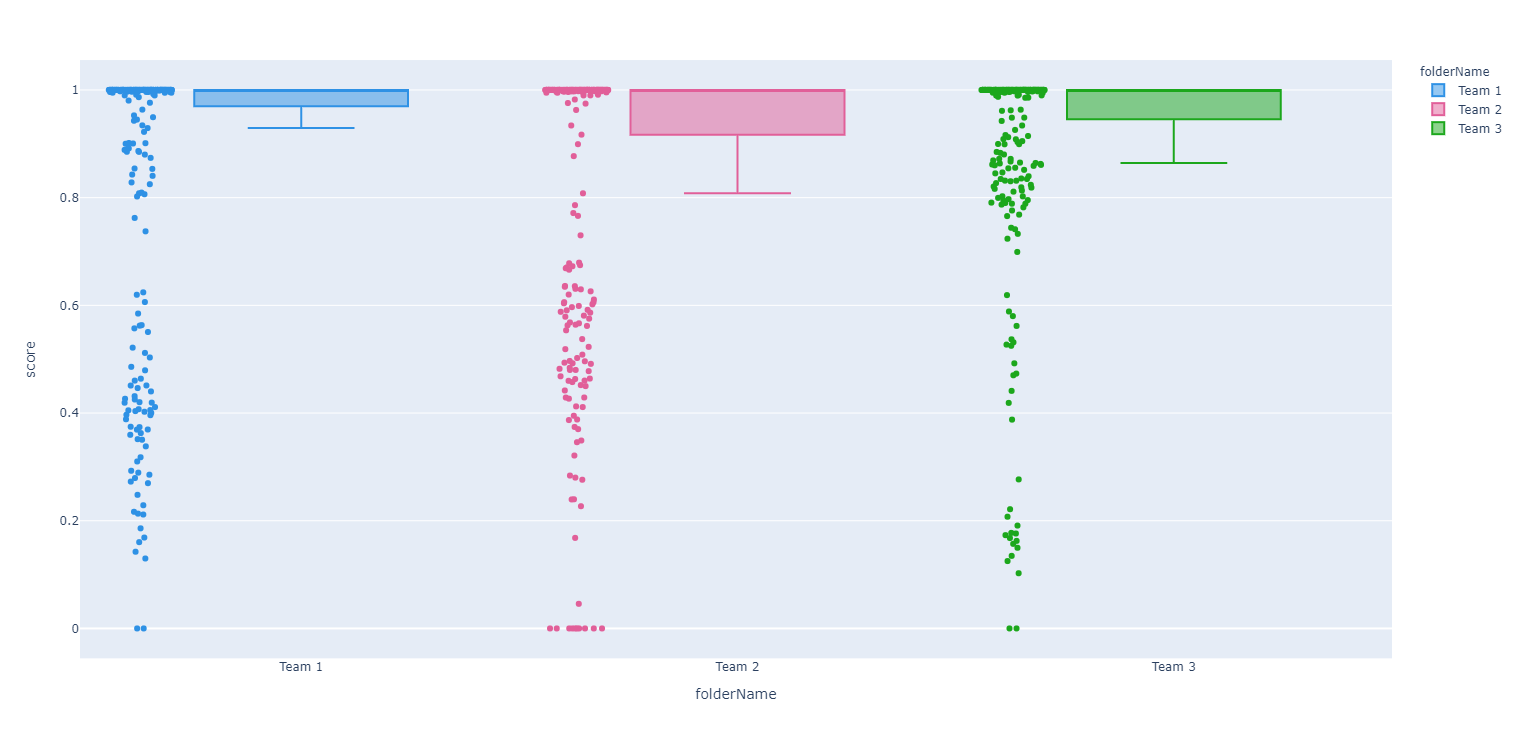
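Once the DataFrame is available, a per-folder summary is often a useful first look at annotation quality. The sketch below uses a small hand-built DataFrame in place of real output. The column names `folderName`, `imageName`, and `score`, as well as the values, are assumptions for illustration; check the columns of the DataFrame you actually get back before reusing this.

```python
import pandas as pd

# Toy stand-in for the returned DataFrame; column names and values are assumed
benchmark_df = pd.DataFrame(
    {
        "folderName": ["Team 1", "Team 1", "Team 2", "Team 2"],
        "imageName": ["a.jpg", "b.jpg", "a.jpg", "b.jpg"],
        "score": [0.9, 0.7, 1.0, 0.5],
    }
)

# Average instance score per folder, i.e. per Annotator in this workflow
mean_scores = benchmark_df.groupby("folderName")["score"].mean()
print(mean_scores)
```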

As with the consensus computation, plots are generated when show_plots is enabled:

    benchmark_df = sa.benchmark(
        project="Project Name",
        gt_folder="Benchmark Annotations",
        folder_names=["Team 1", "Team 2", "Team 3"],
        export_root="./exports",
        show_plots=True,
    )
